Thanks to its simplicity and ease of use, Python has become one of the most popular programming languages among developers. That popularity extends to the Artificial Intelligence community, where high-level Python frameworks make it easy to run complex models. Domains such as computer vision and natural language processing have seen tremendous development, and Python libraries have kept pace with the latest advancements.

If you've seen one of this year's most recent technical advances, the "Tesla Bot", you'll understand how far AI has progressed. The Tesla Bot is a humanoid robot that is meant to eventually live alongside us and speak with us in the same way that we interact with other humans. The ability to understand our language and answer in an understandable manner is nothing short of amazing, and Natural Language Processing systems play a crucial role in enabling these capabilities.

In this post, we'll look at some of the best Python libraries designed for Natural Language Processing.

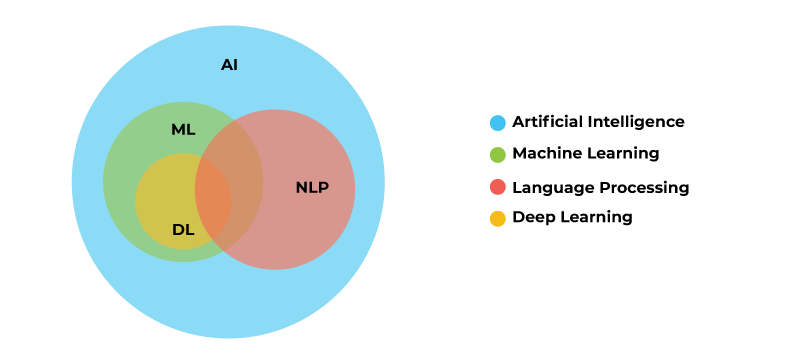

What is NLP?

Natural Language Processing (NLP) is a broad field that falls under the umbrella of Artificial Intelligence (AI). NLP lets computers interpret text and spoken words in a way similar to how humans do. To get the intended outcome, an NLP system must understand not just individual words but also phrases and paragraphs in context, based on syntax, grammar, and so on. NLP algorithms break human language into machine-understandable chunks that can be used to build NLP-based software.
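
To make the idea of machine-understandable chunks concrete, here is a minimal sketch that uses only Python's standard library. The regular expressions, the function names (`split_sentences`, `tokenize`, `build_vocabulary`) and the sample text are assumptions made for illustration; real NLP libraries use far more careful rules.

```python
import re

def split_sentences(text):
    """Naively split text into sentences at ., ! or ? followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]

def tokenize(sentence):
    """Lowercase a sentence and return its word tokens."""
    return re.findall(r"[a-z0-9']+", sentence.lower())

def build_vocabulary(token_lists):
    """Assign each distinct token an integer id in order of first appearance."""
    vocab = {}
    for tokens in token_lists:
        for tok in tokens:
            vocab.setdefault(tok, len(vocab))
    return vocab

text = "I need a cup of hot coffee. Coffee keeps me awake!"  # illustrative sample
sentences = split_sentences(text)
tokens = [tokenize(s) for s in sentences]
vocab = build_vocabulary(tokens)

# Replace every token with its integer id, the form a model can work with
encoded = [[vocab[t] for t in toks] for toks in tokens]

print(sentences)
print(tokens)
print(encoded)
```

The same word ("coffee") gets the same id in both sentences, which is the simplest version of turning text into numbers.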

Today, NLP is finding applications across many industries, thanks to the creation of helpful NLP libraries. In fact, NLP is now an essential component of Deep Learning development. Among other NLP applications, extracting relevant information from text is critical for building chatbots and virtual assistants. Training these NLP algorithms well requires a huge amount of data, yet assistants such as Google Assistant and Alexa are becoming more natural day by day.

Python Libraries for NLP

Previously, natural language processing could only be done by experts with specialised training: in addition to mathematics and machine learning, practitioners needed to be familiar with numerous key linguistic concepts. Ready-made NLP tools are now available to assist with text preparation, and they are widely used by the community, so that we can concentrate on building models and tuning parameters. The primary goal of NLP libraries is to make text preparation simpler.

To tackle NLP challenges, a number of tools and packages are available. Today, we'll go through a few of the most useful Python libraries for natural language processing, based on my own experience with them. Before we begin, it's important to understand that the libraries on this list only partially overlap in their roles, so a direct comparison is not always possible. They are also not listed in order of how effective they are for NLP.

Here are some of the Python NLP libraries:

- NLTK

- spaCy

- Gensim

- CoreNLP

- Pattern

- Polyglot

- TextBlob

- AllenNLP

- Hugging Face Transformers

- Flair

Now, let us go through a brief introduction for each one of them.

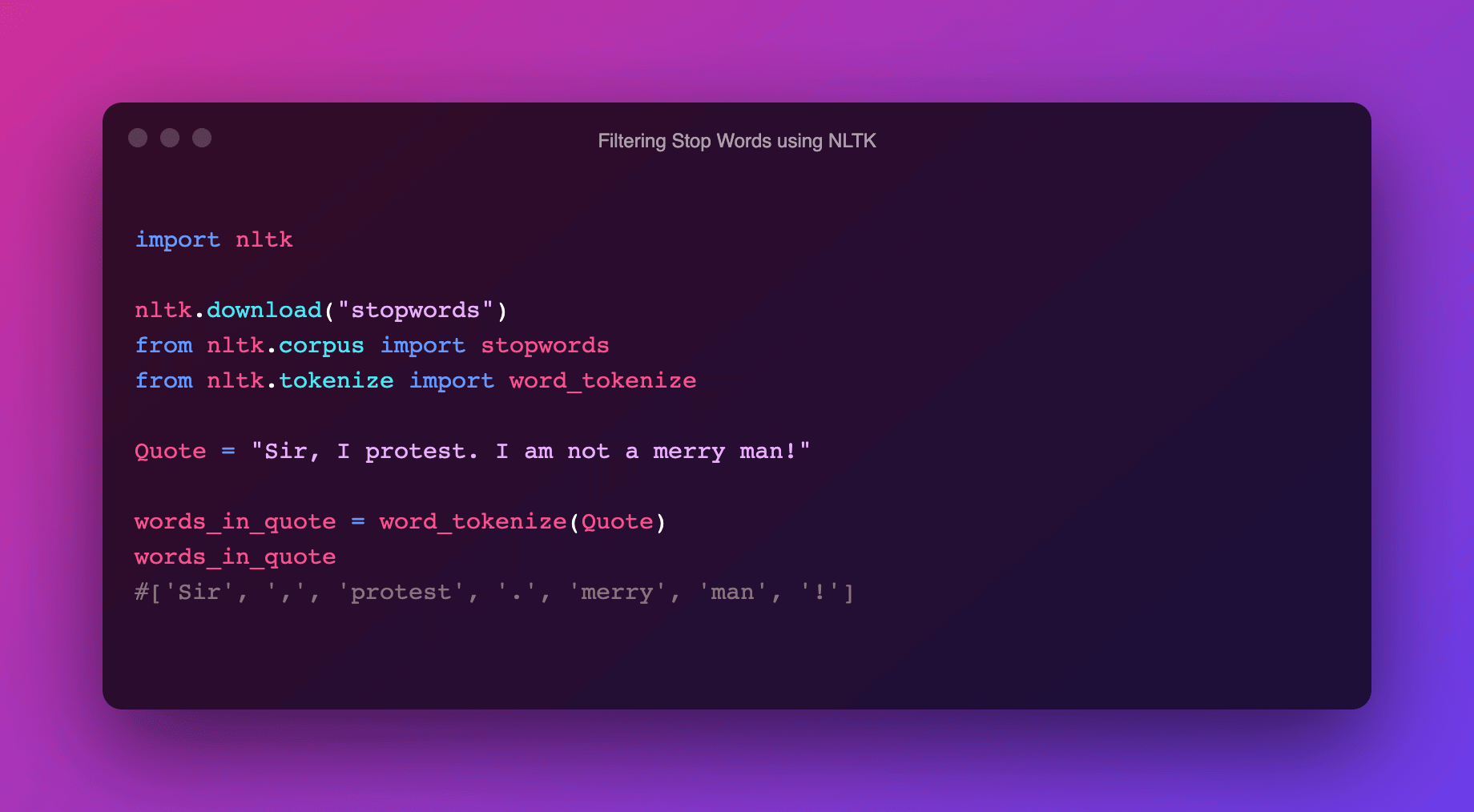

1. NLTK (Natural Language Toolkit)

Natural Language Toolkit is one of the most frequently used libraries in the industry for building Python applications that work with human-language data. NLTK can assist you with anything from splitting paragraphs into sentences, to recognising the part of speech of specific words, to identifying the main topic of a text. It is a very important tool for preparing text for further analysis, for example before feeding it to a model: it helps turn words into numbers that the model can then work with. The toolkit contains nearly all of the tools required for NLP.

NLTK Features:

- Helps with text classification

- Helps with tokenization

- Helps with parsing

- Helps with part-of-speech tagging

- Helps with stemming
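
Two of these features, tokenization and stemming, can be sketched in a few lines. The sketch deliberately uses `TreebankWordTokenizer` and `PorterStemmer`, which should work without downloading any extra NLTK data; the sample sentence is an assumption made for illustration.

```python
from nltk.stem import PorterStemmer
from nltk.tokenize import TreebankWordTokenizer

sentence = "The children were running and jumping in the gardens"  # illustrative sample

# Rule-based tokenizer that follows Penn Treebank conventions
tokens = TreebankWordTokenizer().tokenize(sentence)

# Porter stemmer: strips common English suffixes to get a crude word stem
stemmer = PorterStemmer()
stems = [stemmer.stem(tok) for tok in tokens]

for tok, stem in zip(tokens, stems):
    print(f"{tok:10} -> {stem}")
```

Stemming is a heuristic, so a stem is not always a dictionary word; when real base forms are needed, a lemmatizer is the usual alternative.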

NLTK is one of the best-known comprehensive libraries for NLP and offers plenty of approaches for each NLP task. It also supports a significant number of languages.

If you are a beginner, however, it can be complicated to learn and use.

NLTK can be installed easily using:

pip install nltk

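Here is a quick example of how you can use NLTK to tokenize a sentence:

>>> import nltk
>>> # word_tokenize needs the Punkt tokenizer data, fetched once with nltk.download('punkt')
>>> # (recent NLTK releases ask for 'punkt_tab' instead)
>>> sentence = """I need a cup of hot coffee"""
>>> tokens = nltk.word_tokenize(sentence)
>>> tokens
['I', 'need', 'a', 'cup', 'of', 'hot', 'coffee']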

Check official documentation for more information here.

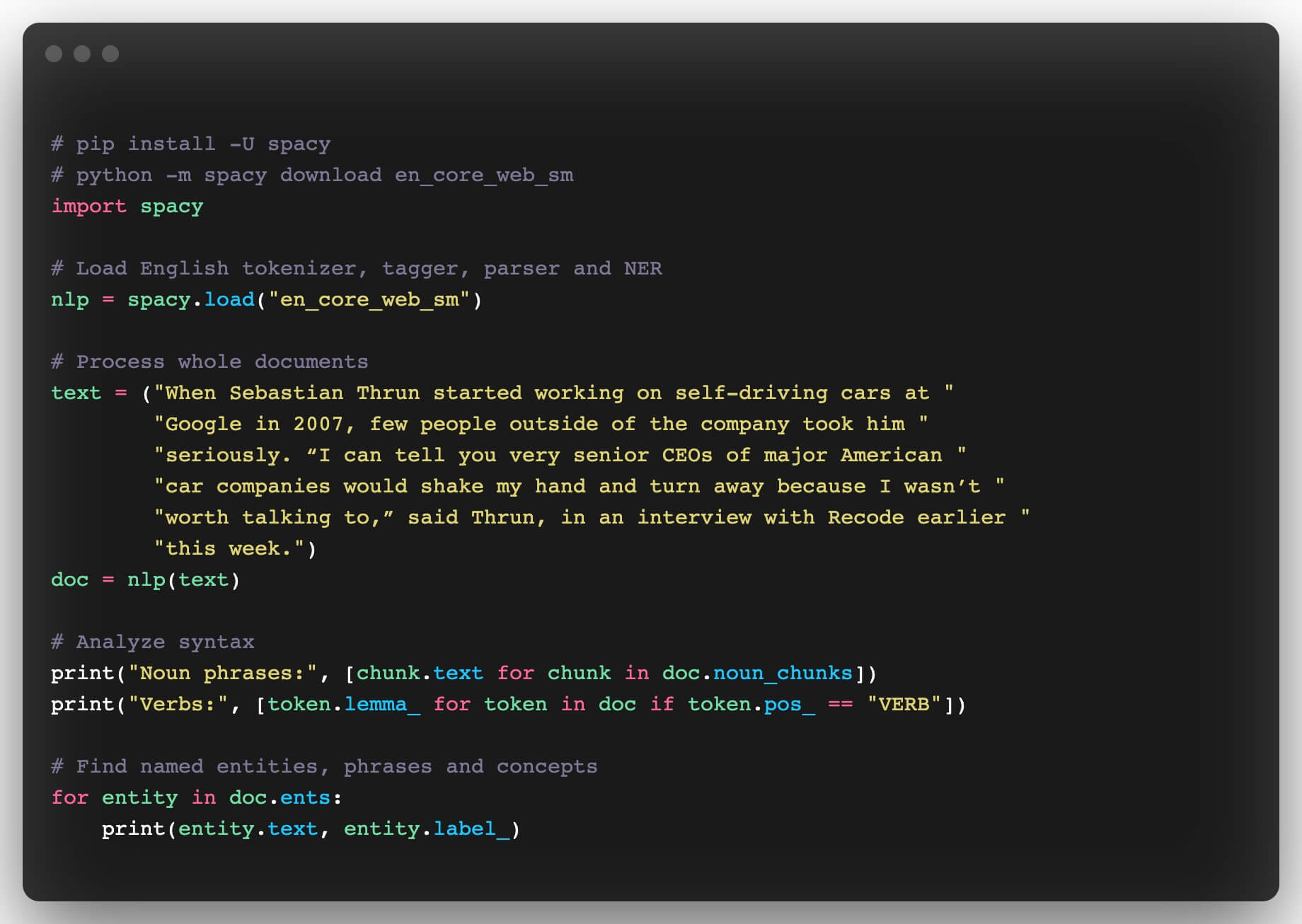

2. spaCy

spaCy is a Python library built for advanced Natural Language Processing. It is based on cutting-edge research and was designed from the start to be used in real-world products. spaCy ships pre-trained pipelines and currently supports tokenization and training for more than 60 languages. It offers state-of-the-art speed and neural network models for tagging, parsing, named entity recognition, text classification, and other tasks, as well as a production-ready training system and simple model packaging, deployment, and workflow management.

spaCy offers the ability to use neural networks for training and provides built-in word vectors.

Here is a quick example for loading the English tokenizer, tagger, parser, and NER and processing the text to create noun phrases, verbs, entity text, and labels:

Check official documentation for more information here.

3. Gensim

Gensim is a well-known Python package for natural language processing tasks. Its distinguishing feature is the use of vector space modeling and topic modeling tools to determine the semantic similarity between two documents.

Because all algorithms in Gensim are memory-independent with respect to corpus size, it can handle input larger than RAM. It includes algorithms such as the Hierarchical Dirichlet Process (HDP), Random Projections (RP), Latent Dirichlet Allocation (LDA), Latent Semantic Analysis (LSA/SVD/LSI), and word2vec, which are very effective on natural language problems. Processing speed and careful memory optimization are Gensim's strongest features.

- Gensim can work with large datasets and process data streams

- It also provides TF-IDF vectorization, word2vec, doc2vec, Latent Semantic Analysis, and Latent Dirichlet Allocation

Gensim can be used to:

- Find text similarities

- Convert words and documents to vectors

- Summarize text (in releases before 4.0, which removed the summarization module)

Gensim can be installed easily using:

pip install gensim

For installation and usage of the library, you can check out the official GitHub repo here.

4. CoreNLP

CoreNLP can be used to create linguistic annotations for text, such as:

- Token and sentence boundaries;

- Parts of speech;

- Named entities;

- Numeric and temporal values;

- Dependency and constituency parses;

- Sentiment;

- Quotation attributions;

- Relations between words

CoreNLP supports a variety of human languages, such as Arabic, Chinese, English, French, German, and Spanish. It is written in Java but can be used from Python as well. The heart of CoreNLP is the pipeline: it takes raw text, passes it through a series of NLP annotators, and produces a final set of annotations.
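
The pipeline idea can be sketched in plain Python. This is not CoreNLP's API: the annotation dictionary, the three annotator functions and the `run_pipeline` helper are hypothetical names that only illustrate how each stage adds its own layer of annotations on top of what earlier stages produced.

```python
import re

def tokenize(annotation):
    """Annotator 1: add word and punctuation tokens."""
    annotation["tokens"] = re.findall(r"\w+|[^\w\s]", annotation["text"])
    return annotation

def split_sentences(annotation):
    """Annotator 2: group tokens into sentences, closing one at ., ! or ?"""
    sentences, current = [], []
    for tok in annotation["tokens"]:
        current.append(tok)
        if tok in {".", "!", "?"}:
            sentences.append(current)
            current = []
    if current:
        sentences.append(current)
    annotation["sentences"] = sentences
    return annotation

def tag_numbers(annotation):
    """Annotator 3: collect tokens that are plain integers as numeric values."""
    annotation["numbers"] = [int(t) for t in annotation["tokens"] if t.isdecimal()]
    return annotation

def run_pipeline(text, annotators):
    """Pass the raw text through each annotator in order."""
    annotation = {"text": text}
    for annotate in annotators:
        annotation = annotate(annotation)
    return annotation

result = run_pipeline(
    "The order has 3 items. It ships in 12 days!",  # illustrative sample
    [tokenize, split_sentences, tag_numbers],
)
print(result["sentences"])
print(result["numbers"])
```

Order matters in such a design: the sentence splitter and the number tagger both rely on the tokens produced by the first annotator.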

To get started, check out their official GitHub repo here.

5. Pattern

Pattern is a Python-based NLP library that provides features such as part-of-speech tagging, sentiment analysis, and vector space modeling. It also offers support for the Twitter and Facebook APIs, a DOM parser, and a web crawler. It is mostly used for web mining, so it may not be sufficient for other natural language processing projects.

Pattern is often used to convert HTML data to plain text and resolve spelling mistakes in textual data.

You can check out their official GitHub repo here.

6. Polyglot

Although it is less well known, we consider this NLP library one of our favourites because it offers an impressive breadth of analysis and covers a wide range of languages. It is built on NumPy, which makes it very fast. Polyglot's spaCy-like efficiency and ease of use make it an excellent choice for projects that need a language spaCy does not support. The polyglot package provides a command-line interface as well as library access through pipeline methods.

Features of Polyglot:

- Tokenization (165 languages)

- Part of Speech Tagging (16 languages)

- Sentiment Analysis (136 languages)

- Word Embeddings (137 languages)

- Language Detection (196 languages)

- Named Entity Recognition (40 languages)

Check out the official documentation here.

7. TextBlob

TextBlob is a Python library that is often used for natural language processing (NLP) tasks such as part-of-speech tagging, noun phrase extraction, sentiment analysis, and classification. It is built on top of the NLTK library.

Its user-friendly interface provides access to basic NLP tasks such as sentiment analysis, word extraction, parsing, and many more. It's ideal for simple NLP tasks and for those who are just getting started.

Features:

- Helps with spelling correction

- Helps with noun phrase extraction

TextBlob's built-in models target English; other languages are supported through separate extension packages.

It is often used for spelling correction and sentiment analysis. Older releases also offered translation and language detection, but those features have since been removed.

For installation and usage of the library, you can check out the official documentation here.

8. AllenNLP

Among Natural Language Processing tools, AllenNLP is one of the most advanced. It is a collection of tools and libraries built on top of PyTorch. It suits both business and research use. Building a model with AllenNLP is considerably easier than building one from scratch with PyTorch.

Features offered by AllenNLP include:

- Classification tasks

- Helps in text + vision multimodal tasks such as Visual Question Answering (VQA)

- Sequence tagging

- Pair classification

AllenNLP offers very comprehensive NLP capabilities, but it needs to be optimized for speed.

For installation and usage of the library, you can check out the official documentation here.

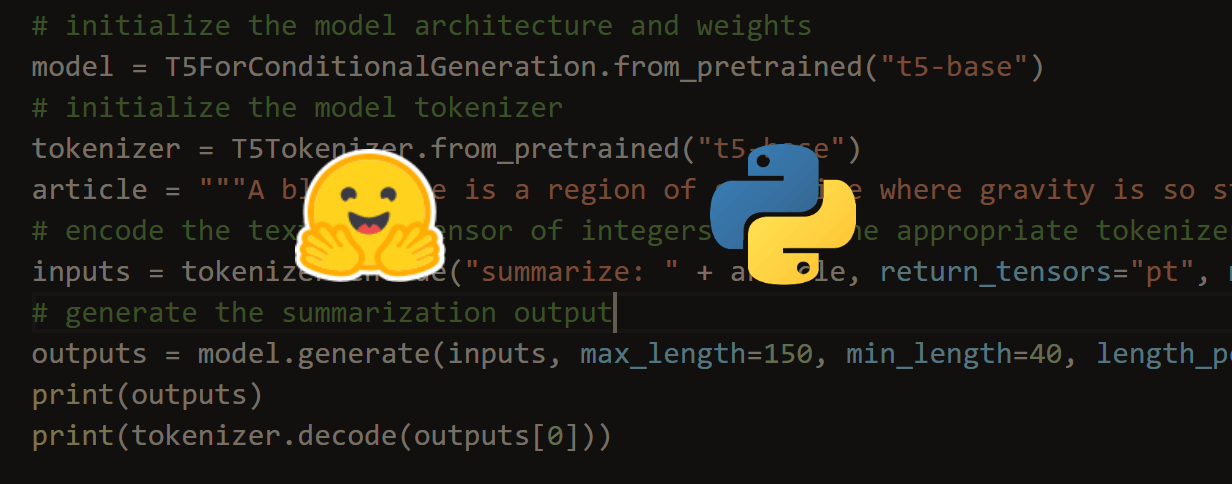

9. Hugging Face Transformers

Hugging Face Transformers is one of the most widely used libraries in the NLP community. It provides native support for PyTorch and TensorFlow-based models, increasing its applicability in the deep learning community.

BERT and RoBERTa are two of the best-known models available through the Hugging Face library; such models are used for machine translation, question answering, and many other applications. The Hugging Face pipeline API provides a quick and simple way to perform a range of NLP operations, and the library also supports GPUs for training, which can speed up processing considerably.
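
A minimal sketch of the pipeline API for sentiment analysis. The model name is an assumption chosen for illustration (a DistilBERT checkpoint fine-tuned on SST-2); it is downloaded from the Hugging Face Hub on first use, so the first run needs network access. The input sentences are made up.

```python
from transformers import pipeline

# Pin the model explicitly so the example does not depend on the task default
classifier = pipeline(
    "sentiment-analysis",
    model="distilbert-base-uncased-finetuned-sst-2-english",
)

results = classifier(["I love this library.", "The documentation was confusing."])

# Each result is a dict with a predicted label and a confidence score
for result in results:
    print(result["label"], round(result["score"], 3))
```

The same `pipeline` function accepts other task names, such as `"question-answering"` or `"translation"`, and a `device` argument for running on a GPU.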

Hugging Face Library is often used for:

- Text generation

- Question Answering tasks

- Machine Translation

- Sentiment Analysis

You can get started with the Hugging Face library for NLP here.

10. Flair

Flair is a deep learning library for NLP tasks built on top of PyTorch. It lets you apply the latest NLP models to your text, for tasks such as named entity recognition, part-of-speech tagging, sense disambiguation, and classification, and it supports a growing number of languages.

Flair natively provides pre-trained models for NLP tasks such as:

- Text classification

- Part-of-Speech tagging

- Named Entity Recognition

Flair also supports custom models. Full documentation is available here.

Conclusion

Computers can now process text and human language far more easily, thanks to developers who have contributed to natural language processing by offering simple tools and frameworks. Python has proven capable of adapting to a wide range of contemporary computational challenges that plagued developers in the past. With Python's extensive NLP libraries, programmers can build exceptional text processing apps and help their organisations get vital insights from text data.

The key to selecting a particular NLP python library for your projects or tasks is knowing which features are available in it and how they relate to one another as part of one package.