If you're a photographer or work in the film industry, you're probably acquainted with super slow motion, but you may not know how it works. Today we will learn how slow motion works and how to make your own slow motion videos using deep learning.

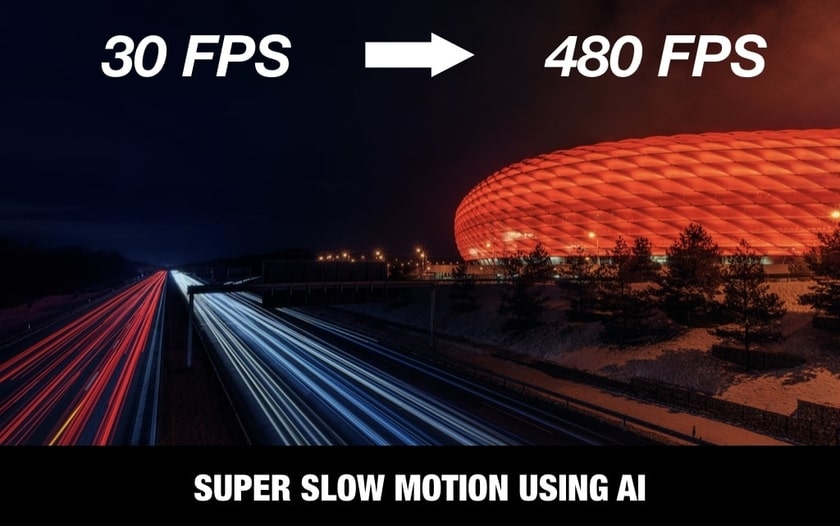

Higher FPS for Slow motion videos

FPS (frames per second) is the number of frames captured each second, and it is one of the most important properties of a recorded video. More frames mean more information to view, and fewer frames mean less.

If you have ever tried the Super Slow-mo function on your smartphone or watched a slow motion video, you may have noticed that the scene is recorded at a very high frame rate, approximately 960 frames per second (fps), and played back at 30 fps. Playback is therefore 32 times slower than real time (960 / 30 = 32), which is why the motion in the recorded footage appears so slow.
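The arithmetic behind the slowdown can be sketched in a few lines. The function names below are illustrative, not part of any library, and the 960 fps and 30 fps values are the figures quoted above.

```python
def slowdown_factor(capture_fps, playback_fps):
    """How many times slower than real time the footage plays back."""
    if capture_fps <= 0 or playback_fps <= 0:
        raise ValueError("frame rates must be positive")
    return capture_fps / playback_fps


def playback_duration(real_seconds, capture_fps, playback_fps):
    """Seconds of playback produced by `real_seconds` of recorded action."""
    return real_seconds * slowdown_factor(capture_fps, playback_fps)


if __name__ == "__main__":
    print(slowdown_factor(960, 30))         # 32.0
    print(playback_duration(0.5, 960, 30))  # 16.0
```

Half a second of action captured at 960 fps fills 16 seconds of playback at 30 fps.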

To understand the concept better, below is a skateboarding scene at different frame rates.

At normal frame rate:

At a much higher frame rate:


You might be wondering about the cost of the cameras needed to record such slow motion videos. However, thanks to advances in computer vision research, there are now easy and inexpensive techniques for getting more frames per second (FPS) using deep learning and video frame interpolation.

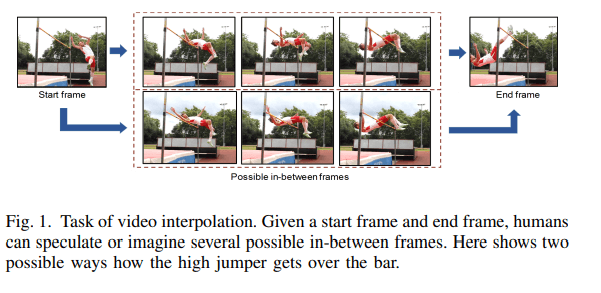

What is Video Frame Interpolation?

Video frame interpolation (VFI) synthesizes intermediate frames between the existing frames of a video. This increases the frames per second and makes motion look smoother, which gives a better viewing experience. VFI is a widely studied problem in computer vision.
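To make the effect on frame count and frame rate concrete, here is a minimal sketch. It assumes the same number of frames is inserted into every gap between consecutive original frames; the function names are hypothetical.

```python
def interpolated_frame_count(num_frames, inserted_per_gap):
    """Total frames after inserting `inserted_per_gap` frames into every gap."""
    if num_frames < 1 or inserted_per_gap < 0:
        raise ValueError("need at least one frame and a non-negative insert count")
    gaps = num_frames - 1
    return num_frames + gaps * inserted_per_gap


def effective_fps(original_fps, inserted_per_gap):
    """Frame rate of the interpolated video when its duration is unchanged."""
    return original_fps * (inserted_per_gap + 1)


if __name__ == "__main__":
    # Three frames per gap turns a 30 fps clip into a 120 fps clip.
    print(interpolated_frame_count(31, 3))
    print(effective_fps(30, 3))
```

Playing the interpolated frames back at the original frame rate instead is what produces the slow motion effect.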


Traditional Video Frame Interpolation Technology

Let us now explore how video frame interpolation was traditionally done. Some common techniques are:

1. Frame Mixing: Frame mixing raises the transparency of the preceding and following keyframes and overlays them to form a new frame. The result is not great: the original keyframes are merely made semi-transparent, so wherever the contour of a moving object differs between the two frames the overlay looks blurred, and the perceived smoothness of the video improves only slightly.

2. Frame Sampling: Keyframes are the frames that mark the start and end of a sequence. A keyframe tells you two things: what action is happening in the frame, and when that action takes place. Frame sampling raises the frame rate by repeating keyframes, so each keyframe is displayed for a longer period of time. This is comparable to inserting no new frames at all: apart from a higher frame rate and a larger file size, it does not improve visual quality. In return, frame sampling uses few resources and is fast.

3. Optical Flow Method: Optical flow is one of the most significant research directions in computer vision. The method estimates how pixels move between the preceding and following frames by comparing them, and uses that motion to create new frames in between. Each pixel in an image undergoes some kind of change when the camera or the objects in the scene move. Optical flow analyses those changes to infer the motion, and then creates new frames based on that information.

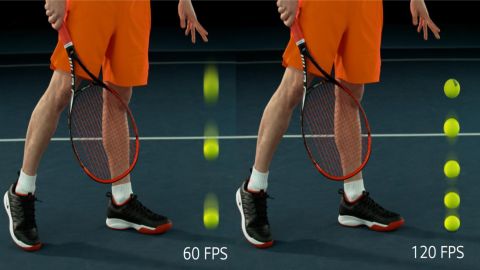

4. Motion Compensation: Motion estimation and motion compensation (MEMC) detects moving blocks from the difference between two frames in both the horizontal and vertical directions. By analysing the motion of these picture blocks, it calculates intermediate frames that increase the video frame rate and give the audience a smoother image. MEMC is mostly used in TVs and mobile phones.
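The techniques above can be compared on a toy example. This is a simplified sketch using NumPy: all function names are hypothetical, and the motion estimate searches for a single whole-frame shift with wrap-around, whereas real MEMC works per block and optical flow works per pixel.

```python
import numpy as np


def blend_frames(prev_frame, next_frame, alpha=0.5):
    """Frame mixing: cross-fade two keyframes into one in-between frame."""
    mixed = (1.0 - alpha) * prev_frame.astype(np.float64) \
        + alpha * next_frame.astype(np.float64)
    return mixed.round().astype(prev_frame.dtype)


def repeat_frames(frames, factor):
    """Frame sampling: show every keyframe `factor` times in a row."""
    return [frame for frame in frames for _ in range(factor)]


def estimate_shift(prev_frame, next_frame, max_shift=4):
    """Toy motion estimation: the (dy, dx) shift that best maps prev onto next."""
    best, best_err = (0, 0), np.inf
    target = next_frame.astype(np.float64)
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            shifted = np.roll(prev_frame, (dy, dx), axis=(0, 1)).astype(np.float64)
            err = np.mean((shifted - target) ** 2)
            if err < best_err:
                best, best_err = (dy, dx), err
    return best


def motion_compensated_midpoint(prev_frame, next_frame, max_shift=4):
    """Toy motion compensation: move prev_frame halfway along the estimated shift."""
    dy, dx = estimate_shift(prev_frame, next_frame, max_shift)
    return np.roll(prev_frame, (dy // 2, dx // 2), axis=(0, 1))


if __name__ == "__main__":
    # A bright 2x2 square that moves 4 pixels to the right between keyframes.
    prev_frame = np.zeros((8, 12), dtype=np.uint8)
    next_frame = np.zeros((8, 12), dtype=np.uint8)
    prev_frame[2:4, 2:4] = 200
    next_frame[2:4, 6:8] = 200

    print(blend_frames(prev_frame, next_frame)[2])                 # two faint squares
    print(len(repeat_frames([prev_frame, next_frame], 2)))         # duplicated frames
    print(motion_compensated_midpoint(prev_frame, next_frame)[2])  # one square, halfway
```

Frame mixing leaves two half-bright copies of the square, frame sampling only duplicates the inputs, and the motion-based midpoint places a single full-brightness square halfway between the two positions.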

Professional video producers using expensive camera equipment are no longer the only ones who can record slow motion videos. Our cellphones and action cameras can now capture slow motion video in real time or convert a captured video to slow motion using various frame interpolation techniques. Researchers have also started to combine traditional video frame interpolation techniques with deep learning to improve the output quality and make it appear more natural.

A recent example of MEMC used in smartphone cameras:

Research work for Super Slow Motion using Deep learning:

Below we have listed some of the most recent and advanced studies and research papers that have successfully implemented deep learning to transform a video to slow motion.

1. Super SloMo: High-Quality Estimation of Multiple Intermediate Frames for Video Interpolation

By: Jiang H., Sun D., Jampani V., Yang M., Learned-Miller E., and Kautz J.

Research Paper Implementation Code

Given two successive frames, video interpolation aims to generate intermediate frames that form a spatially and temporally coherent video sequence. In this paper, the authors introduce an end-to-end multi-frame video interpolation method that uses a convolutional neural network.

They start by computing bi-directional optical flows between the two input images. These flows are then linearly combined at each time step to approximate the intermediate flows. The linear combination works in smooth regions, but it tends to produce artifacts around motion boundaries.

To address this, they use a U-Net architecture to refine the approximated flow and predict soft visibility maps. Finally, the two input images are warped and linearly fused to form each intermediate frame.
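The linear flow combination and the visibility-weighted fusion can be sketched with NumPy. The formulas follow my reading of the Super SloMo paper; the function names, the `eps` guard, and the array layout are assumptions, and the learned parts (flow estimation, flow refinement, and the warping step itself) are left out.

```python
import numpy as np


def approximate_intermediate_flows(flow_0_to_1, flow_1_to_0, t):
    """Linearly combine the two input flows to approximate flows from time t.

    Flows are arrays of shape (H, W, 2); t lies strictly between 0 and 1.
    """
    flow_t_to_0 = -(1.0 - t) * t * flow_0_to_1 + t * t * flow_1_to_0
    flow_t_to_1 = (1.0 - t) ** 2 * flow_0_to_1 - t * (1.0 - t) * flow_1_to_0
    return flow_t_to_0, flow_t_to_1


def fuse_warped_frames(warped_0, warped_1, vis_0, vis_1, t, eps=1e-8):
    """Blend two already-warped frames, weighted by time and visibility maps.

    vis_0 and vis_1 are soft visibility maps in [0, 1]; eps (an assumption of
    this sketch) avoids division by zero where neither frame sees a pixel.
    """
    w0 = (1.0 - t) * vis_0
    w1 = t * vis_1
    return (w0 * warped_0 + w1 * warped_1) / (w0 + w1 + eps)


if __name__ == "__main__":
    # Constant motion of 4 pixels to the right between frame 0 and frame 1.
    f01 = np.full((2, 2, 2), 4.0)
    f10 = -f01
    back, forward = approximate_intermediate_flows(f01, f10, t=0.25)
    print(back[0, 0], forward[0, 0])
```

For constant motion at t = 0.25, the sketch yields a flow of one pixel back toward the first frame and three pixels forward toward the second, which is what linear motion predicts. Because t is an ordinary input, the same functions can be evaluated at any number of time steps.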

Since none of the learned network parameters are time-dependent, the method can produce as many intermediate frames as needed. The network is trained on over a thousand video clips recorded at 240 fps.

Get started with this research paper's code implementation on GitHub.

2. Time Lens: Event-based Video Frame Interpolation

By: Stepan Tulyakov, Daniel Gehrig, Stamatios Georgoulis, Julius Erbach, Mathias Gehrig, Yuanyou Li, and Davide Scaramuzza (Huawei Technologies, Zurich Research Center; Dept. of Informatics, Univ. of Zurich; Dept. of Neuroinformatics, Univ. of Zurich and ETH Zurich)

Research Paper Implementation Code

Existing frame interpolation methods generate intermediate frames by inferring object motion in the image from consecutive keyframes. Without additional information they have to rely on first-order approximations such as optical flow, which restricts the kinds of motion they can model and leads to errors in highly dynamic scenarios. Event cameras address this limitation by providing auxiliary visual information between frames: they measure per-pixel brightness changes.
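To give a feel for what "per-pixel brightness changes" means, here is an idealized sketch that derives event polarities from two ordinary frames. It is not the Time Lens pipeline: a real event camera fires asynchronously rather than comparing frames, and the threshold, the `eps` offset, and the function name are assumptions made for illustration.

```python
import numpy as np


def events_between(prev_frame, curr_frame, threshold=0.2, eps=1e-3):
    """Idealized event model: one polarity value per pixel.

    Returns +1 where log-brightness rose by at least `threshold`,
    -1 where it fell by at least `threshold`, and 0 elsewhere.
    Frames are float arrays with brightness in [0, 1].
    """
    delta = np.log(curr_frame + eps) - np.log(prev_frame + eps)
    polarity = np.zeros(delta.shape, dtype=np.int8)
    polarity[delta >= threshold] = 1
    polarity[delta <= -threshold] = -1
    return polarity


if __name__ == "__main__":
    prev_frame = np.array([[0.5, 0.5, 0.5]])
    curr_frame = np.array([[1.0, 0.5, 0.1]])
    # Brighter pixel, unchanged pixel, darker pixel.
    print(events_between(prev_frame, curr_frame))
```

Pixels that do not change produce no events, which is why event data is sparse in static or low-texture regions.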

While synthesis-based approaches can capture non-linear motion, they tend to perform poorly in low-texture regions, so they complement flow-based approaches. In this work, the authors introduce Time Lens, a method that leverages the advantages of both flow-based and synthesis-based approaches.

They evaluate their method on several synthetic and real benchmarks, where they show an improvement of up to 5.21 dB in terms of PSNR. They also released a large-scale dataset of highly dynamic scenes that aims to push the limits of existing methods.
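PSNR (peak signal-to-noise ratio) compares an interpolated frame with the ground-truth frame, and higher is better. Below is a minimal sketch of the standard definition using NumPy; the function name and the 8-bit default for `max_value` are assumptions, not taken from the paper's code.

```python
import numpy as np


def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape."""
    diff = reference.astype(np.float64) - estimate.astype(np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(max_value ** 2 / mse)


if __name__ == "__main__":
    truth = np.zeros((4, 4), dtype=np.uint8)
    off_by_one = np.ones((4, 4), dtype=np.uint8)
    print(psnr(truth, off_by_one))
```

Because PSNR is logarithmic in the mean squared error, a gain of 5.21 dB corresponds to roughly a 3.3 times lower mean squared error.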