Flow-edge Guided Video Completion:

Made By:

Chen Gao, Ayush Saraf, Jia-Bin Huang, Johannes Kopf

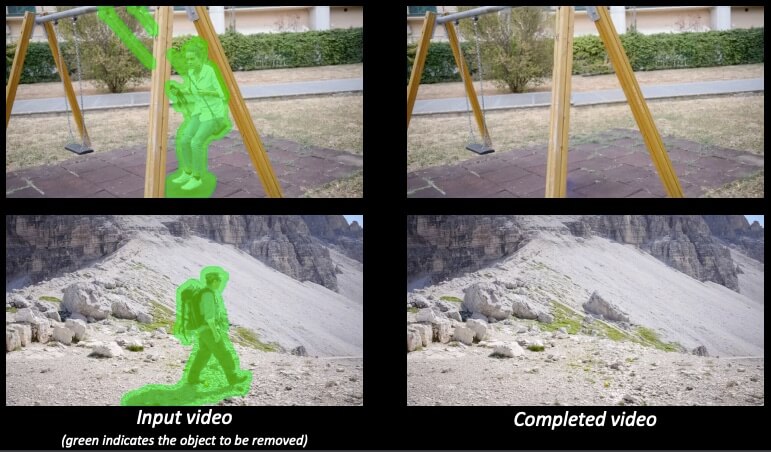

The Flow-edge Guided Video Completion project lets users seamlessly remove objects and watermarks from casually captured videos. It can also expand a video's field of view.

This project was developed by Chen Gao, Ayush Saraf, Jia-Bin Huang, and Johannes Kopf, together with Virginia Tech and the Facebook research team.

Compared to previous flow completion methods, this approach preserves the sharpness of motion boundaries, resulting in very clean object removals.

How does it work?

Video completion is the task of filling a given space-time region with newly synthesized content. To properly remove objects and watermarks from a video, the newly generated content should embed seamlessly in the video, and the alteration should be as imperceptible as possible.
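To make the idea of a "space-time region" concrete, here is a minimal sketch of how the input to a video completion method could be represented. The array shapes, the toy data, and the moving 2x2 box are assumptions made for illustration; they are not taken from the project's code.

```python
import numpy as np

# Hypothetical toy video: 5 frames, 4x6 pixels, RGB values in [0, 1).
rng = np.random.default_rng(0)
video = rng.random((5, 4, 6, 3))

# The space-time region to fill is a boolean mask with one entry per pixel
# per frame. Here the "object" is a 2x2 box that moves one pixel to the
# right in every frame.
mask = np.zeros((5, 4, 6), dtype=bool)
for t in range(5):
    mask[t, 1:3, t:t + 2] = True

# Pixels inside the mask are unknown; mark them as NaN so they cannot be
# used by accident. A completion method has to synthesize these values.
known = np.where(mask[..., None], np.nan, video)

missing_per_frame = mask.sum(axis=(1, 2))
```

Everything outside the mask stays untouched, which is why the synthesized content has to match its surroundings in both space and time.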

Video completion poses two main challenges:

- The result must be temporally coherent (it should not flicker).

- The result must respect dynamic camera motion as well as complex object motion in the video.

Most earlier methods used patch-based synthesis techniques, which are often slow and have limited ability to synthesize new content because they can only remix patches that already exist in the video.

Flow-based approaches have been quite successful at video completion. They synthesize color and flow jointly and propagate the color along flow trajectories to improve temporal coherence, which alleviates memory problems and enables high-resolution output. The flow-edge guided video completion method follows the same general approach.
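As a rough illustration of what "propagating color along flow trajectories" means, the sketch below fills the missing pixels of one frame by following a flow vector into a neighboring frame and copying the color found there. It assumes the flow inside the hole has already been completed, uses nearest-pixel lookup, and considers a single neighbor frame. The function name `propagate_from_neighbor` is hypothetical, and this is not the authors' implementation.

```python
import numpy as np

def propagate_from_neighbor(target, mask, neighbor, flow):
    """Fill masked pixels of `target` with colors fetched from `neighbor`.

    target, neighbor: (H, W, 3) float arrays.
    mask: (H, W) bool array, True where `target` is missing.
    flow: (H, W, 2) array; flow[y, x] = (dx, dy) points from a pixel in
          `target` to its corresponding location in `neighbor`.
    Returns the filled frame and a mask of pixels that are still missing.
    """
    h, w = mask.shape
    ys, xs = np.nonzero(mask)

    # Follow each flow vector and round to the nearest pixel.
    src_x = np.rint(xs + flow[ys, xs, 0]).astype(int)
    src_y = np.rint(ys + flow[ys, xs, 1]).astype(int)

    # Only pixels whose trajectory lands inside the neighbor frame can be filled.
    valid = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)

    out = target.copy()
    out[ys[valid], xs[valid]] = neighbor[src_y[valid], src_x[valid]]

    remaining = mask.copy()
    remaining[ys[valid], xs[valid]] = False
    return out, remaining

# Toy scene: the content shifts by one pixel between the two frames,
# so the correct flow is (dx, dy) = (1, 0) everywhere.
h, w = 4, 6
neighbor = np.zeros((h, w, 3))
neighbor[..., 0] = np.arange(w)[None, :]   # red channel encodes the column
truth = np.roll(neighbor, -1, axis=1)      # truth[y, x] == neighbor[y, x + 1] for x < w - 1

mask = np.zeros((h, w), dtype=bool)
mask[1:3, 2:4] = True
target = truth.copy()
target[mask] = 0.0                          # erase the region to be completed

flow = np.zeros((h, w, 2))
flow[..., 0] = 1.0

filled, remaining = propagate_from_neighbor(target, mask, neighbor, flow)
```

In this toy case every missing pixel has a valid source, so `filled` matches the original frame. In a real video, some pixels are never visible in any frame reachable this way, and those have to be synthesized by other means.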

But previous flow-based approaches falter in complex situations because of how they work. For example, they propagate color values directly. However, colors often change over the course of a video due to effects such as shadows, lens vignetting, auto exposure, and white balancing, so directly copied colors lead to visible artifacts.

The flow-edge guided video completion method addresses these limitations with the following solutions:

- Flow edges: By explicitly completing flow edges, it obtains piecewise-smooth flow completion.

- Non-local flow: Handles regions that cannot be reached through transitive flow (e.g., periodic motion, such as walking).

- Seamless blending: Avoids visible seams in the results by operating in the gradient domain.

- Memory efficiency: Handles videos with up to 4K resolution, while other methods fail due to excessive GPU memory requirements.
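The seamless blending idea can be illustrated with a one-dimensional toy example. The sketch below assumes a single row of pixels, a source signal that is uniformly brighter than the target (as after an auto-exposure change), and a small least-squares solve. The helper name `blend_1d` is hypothetical, and the actual method works on 2D gradients across video frames, so treat this only as a simplified picture of the principle.

```python
import numpy as np

def blend_1d(target, source, lo, hi):
    """Fill target[lo:hi] so that its gradients match those of `source`,
    while staying anchored to the known values target[lo - 1] and target[hi].
    Requires 1 <= lo < hi <= len(target) - 1.
    """
    n = hi - lo                                   # number of unknown pixels
    grads = np.diff(source[lo - 1:hi + 1])        # n + 1 desired differences
    A = np.zeros((n + 1, n))
    b = grads.astype(float)
    for k in range(n + 1):
        # Equation k: u[lo + k] - u[lo + k - 1] = grads[k]
        if k < n:
            A[k, k] = 1.0                         # u[lo + k] is unknown
        else:
            b[k] -= target[hi]                    # u[hi] is known, move to the right side
        if k > 0:
            A[k, k - 1] = -1.0                    # u[lo + k - 1] is unknown
        else:
            b[k] += target[lo - 1]                # u[lo - 1] is known, move to the right side
    u, *_ = np.linalg.lstsq(A, b, rcond=None)
    out = target.astype(float)
    out[lo:hi] = u
    return out

ramp = np.arange(10.0)          # the true signal: a smooth ramp
source = ramp + 5.0             # same content, but 5 units brighter

# Copying colors directly leaves a jump at both ends of the filled region.
naive = ramp.copy()
naive[3:7] = source[3:7]

# Copying gradients instead and solving for the colors removes the jump.
blended = blend_1d(ramp, source, 3, 7)

naive_max_jump = np.abs(np.diff(naive)).max()
blended_max_jump = np.abs(np.diff(blended)).max()
```

Because a uniform brightness offset does not change gradients, the blended result follows the surrounding ramp, while the naive copy shows a clear step where the pasted region begins and ends.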

Who can benefit from this project?

If you want to remove an object or a watermark from a video, this solution does so efficiently and with a small memory footprint. If you want to extend a video's field of view, the same flow-based approach fills in the additional content automatically.

Research information:

The complete research paper is available here: Flow-edge Guided Video Completion.

Try it yourself:

A demo with a code implementation is coming soon. Stay tuned!